Google is watermarking content created with its generative AI tools.

And I think this is good news for content writers.

It could be very bad news for the companies that have gone all-in on AI-generated content.

Google's content watermarking system is called SynthID, and it's been a part of Gemini since early 2024. SynthID can watermark images, video, and text. For text, it works by adjusting the probability of certain tokens appearing in the output.

I'm most interested in text watermarking.

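To simplify, let's imagine tokens are chunks of words. When Gemini 'writes' something, it chooses each token from a list of likely candidates, and SynthID nudges those choices so the finished text carries a statistical pattern. We don't notice it when we read, but a detector with the right key can check for it.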

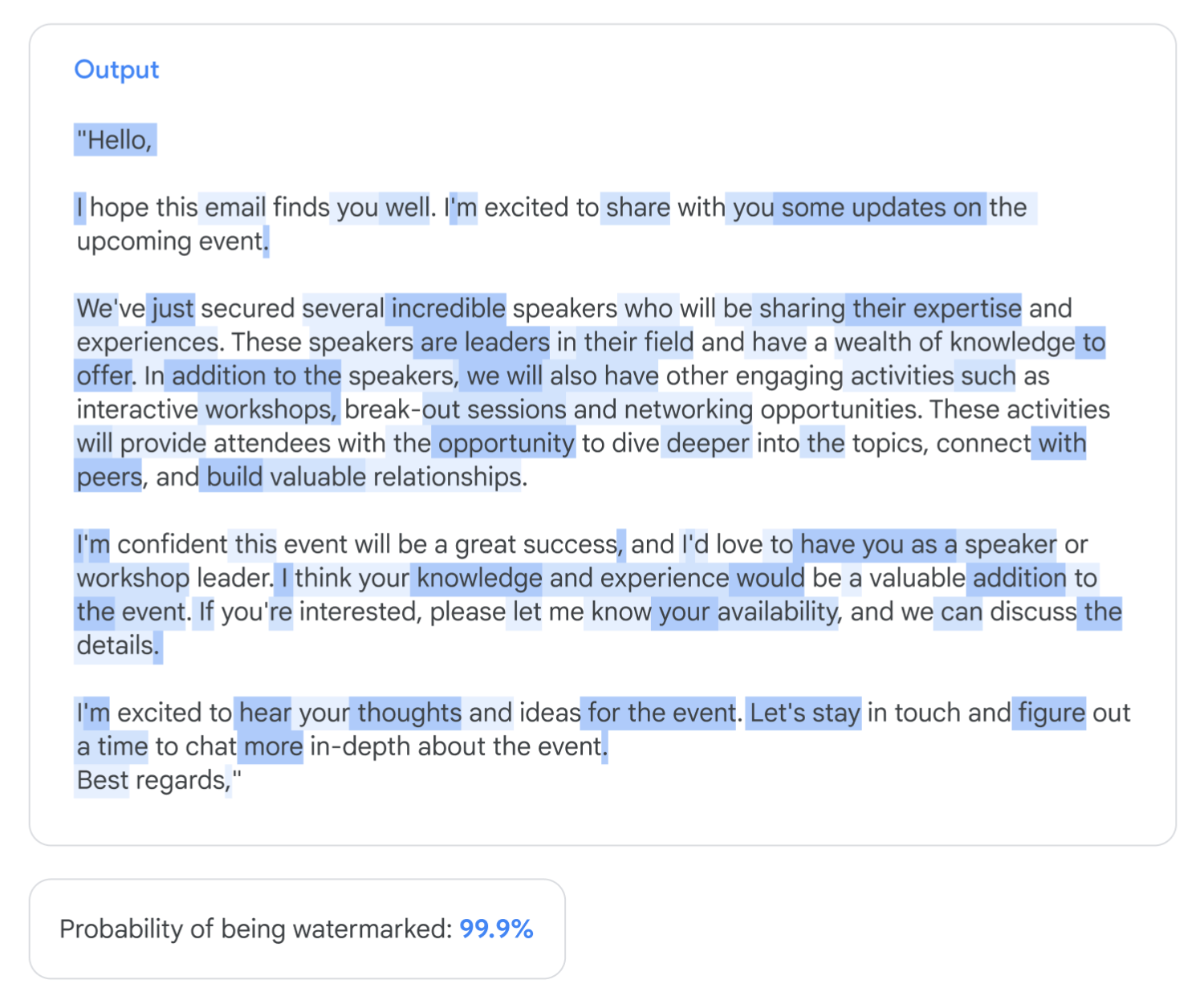
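To make the idea more concrete, here's a toy sketch in Python. It is not SynthID's real algorithm: Google's published description of SynthID Text uses a more involved multi-round scheme it calls tournament sampling, with a real language model and a private key. Everything here (the 20-word "model", `SECRET_KEY`, `g_score`, `pick_token`, `detection_score`) is a hypothetical stand-in. The sketch shows the general principle: a keyed score quietly biases which token gets picked, and anyone holding the key can measure that bias afterwards.

```python
import hashlib
import random

# Hypothetical secret key for this toy example; a real system keeps its key private.
SECRET_KEY = "demo-key"


def g_score(prev_token: str, token: str, key: str = SECRET_KEY) -> float:
    """Keyed pseudorandom score in [0, 1) for a token given the token before it."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def pick_token(prev_token, candidates, weights, rng, watermark=True):
    """Sample the next token from the toy model's probabilities.

    With watermarking on, draw two candidates and keep the one with the
    higher keyed score (a one-round 'tournament'). Both draws still follow
    the model's probabilities, so the output stays plausible.
    """
    first = rng.choices(candidates, weights=weights)[0]
    if not watermark:
        return first
    second = rng.choices(candidates, weights=weights)[0]
    return max(first, second, key=lambda t: g_score(prev_token, t))


def generate(n_tokens, rng, watermark=True):
    # Toy "language model": every step offers the same 20 equally likely words.
    vocab = [f"word{i}" for i in range(20)]
    weights = [1.0] * len(vocab)
    tokens = ["<start>"]
    for _ in range(n_tokens):
        tokens.append(pick_token(tokens[-1], vocab, weights, rng, watermark))
    return tokens


def detection_score(tokens):
    """Average keyed score over adjacent token pairs.

    Unwatermarked text averages roughly 0.5; watermarked text scores higher
    because generation kept favouring high-scoring tokens.
    """
    pairs = list(zip(tokens, tokens[1:]))
    return sum(g_score(p, t) for p, t in pairs) / len(pairs)


rng = random.Random(0)
marked = generate(500, rng, watermark=True)
plain = generate(500, rng, watermark=False)
print(f"watermarked: {detection_score(marked):.2f}")
print(f"plain:       {detection_score(plain):.2f}")
```

A reader sees nothing unusual in either list of words. The difference only shows up when you hold the key and average the scores, which is why this kind of watermark is invisible in practice but detectable in principle.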

If you're curious, there's a version of SynthID on Hugging Face that lets you generate and compare watermarked and non-watermarked text.

Google is (theoretically) capable of labeling AI-generated images in Google Image Search as well. But as far as I can tell, that relies on the Coalition for Content Provenance and Authenticity (C2PA) technical standard, which records where a file came from in its metadata, rather than on SynthID.

I think the two systems are separate, but I'll definitely be looking at them more closely in the next few weeks.

You might think that the best way around SynthID detection is to generate content in another tool. But SynthID's text watermarking has been open source since October 2024. It's only a matter of time before more tools can generate watermarked content and detect it.

A couple of weeks ago, I wrote an article saying that AI detectors don't work. I think that's correct, for now. Perhaps it would be better to say that AI detectors don't work yet.

Google's guidelines say that it doesn't matter whether your website content is generated by AI as long as it's "high-quality".

You might say that's an oxymoron, but that's not the point. Here's what I think: Google has the systems to detect AI-generated content in search results. It could do that any time it wants to. Maybe it already does.

Recently I attended a generative AI course with a few hundred attendees, all in marketing roles. The host talked about scraping hundreds of People Also Ask questions, generating answers with AI, and publishing them all in bulk on the same day.

In my opinion, this is a gold standard example of what not to do in 2024. It's incredibly risky and could completely tank your site. If the worst happened, the sheer volume of content you'd have to review and rewrite would make recovery very difficult.

Instead of teaching people how to use AI for generating content, responsible leaders should be augmenting the skills of talented content writers.

Here's the positive side. SynthID could be good news for content writers. Many freelancers are falsely accused of using AI to generate the content they send to clients, and it's no exaggeration to say that they're losing their livelihoods as a result.

And SynthID is bad news for the businesses who have published thousands of pages of "AI slop", ready to fall foul of the first AI detector that actually works.